Metrics are here to stay, but our engagement with and use of them is evolving. In this post, we discuss:

- What are some of the issues in research assessment today?

- What developments are happening globally to address these?

- Questions about the use of the Impact Factor in assessing research quality

- Responsible metrics: industry initiatives including DORA and the Leiden Manifesto for Research Metrics

We would like to start a conversation on this topic – what are your views? Please direct them to your Portfolio Manager/Editorial contact to help inform our developments in this area.

Assessment and Impact: current challenges

Metrics serve a valuable role in helping us to navigate ever more articles and journals, in providing an objective measure, and in aiding comparison across journals or research outputs. However, they have also created an environment in which research and researchers are often judged not on the basis of a thorough and qualitative review of their work, but solely on the basis of metrics.

Although metrics should be used to *support* a qualitative review process, they are often substituted for qualitative review in order to speed up the process. Proxies for impact and quality, such as citations, numbers of publications and grant funding, are used in research assessment. In many cases, research that has societal impact, niche research, and researchers working to solve real-world challenges don’t receive the credit that they deserve.

What developments are happening in response to these?

A need for reform has been recognized by researchers, institutions, funders and policymakers alike:

- The US National Science Foundation assesses grant applications on the basis of broader impact as well as intellectual merit.

- Rather than lengthy publication lists, Cancer Research UK (CRUK) asks grant applicants to list three to five ‘key achievements or contributions to science’, noting that research publications are just one of the outputs from research.

- Researcher assessment was a recurring topic at the recent event ‘The future of research: assessing the impact of Plan S’, with a consensus around the need to reform current assessment measures.

- Dutch institutions and funders have taken a noteworthy step recently, publishing their position paper championing a more holistic review of academic performance covering education and impact as well as research.

These are just a few examples of the cultural shift happening around the world in the way that impact is being measured, used and defined, and how outcomes are assessed.

What can the Impact Factor tell us about research quality?

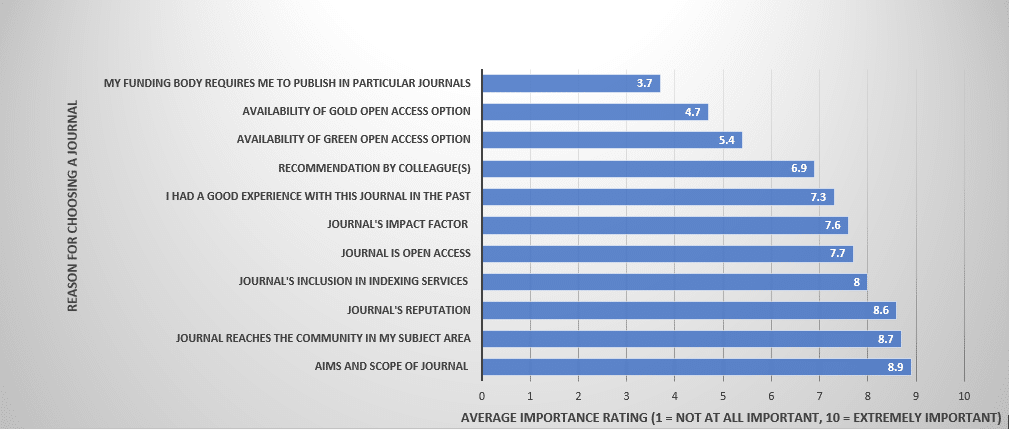

The Impact Factor (IF) is probably the best-known metric in scholarly communication. Many researchers still use the Impact Factor as an easily comprehensible signal of the quality and reliability of a journal. In our post-publication survey, which asks authors about their reasons for submitting to a particular journal, Impact Factor still comes up as an important deciding factor.

Source: T&F Author Survey, data from 2018 and 2019 respondents (to November 2019)

While the Impact Factor is a useful tool for helping reassure potential authors and readers about the quality of a journal and the reliability of its contents, research needs to be assessed on its own merits, and the IF should be used alongside a range of metrics to tell the full story of impact. There are a number of disadvantages to using the Impact Factor alone, some of which are outlined in this Editor Resources post. As Vincent Larivière et al.’s preprint article also notes, Impact Factors can be used for making comparisons between journals, but not for making comparisons between individual papers and their authors.

Many communities are already looking to articulate impact in a more holistic way than is typical when relying on citations alone. The HuMetricsHSS initiative, for example, is aimed at “…rethinking humane indicators of excellence in academia…” through the “…creation of a values-based evaluation framework.”

Moving towards more responsible metrics: industry initiatives

A range of cross-industry initiatives are emerging in response to some of the current challenges in research assessment. Some of these are explained in more detail below:

The Declaration on Research Assessment (DORA)

DORA states that journal-level metrics should not be used “as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions”.

DORA outlines a set of best practice guidelines for institutions, funders, and publishers, including implementing article-level metrics and, where journal-level metrics are employed, presenting these “in the context of a variety of journal-based metrics…that provide a richer view of journal performance”.

Leiden Manifesto for Research Metrics

This comprises 10 principles outlining best practice around the uses of metrics. It doesn’t disavow quantitative measures, but notes that these should ‘support qualitative, expert assessment’, cautioning against ‘ced[ing] decision-making to the numbers’. The Manifesto also calls for metrics to be justified, contextualized, informed and responsible.

In the UK, the 2015 Metric Tide report recommended five principles for responsible metrics: robustness, humility, transparency, diversity and reflexivity. Universities UK set up the UK Forum for Responsible Research Metrics, and the LIS-Bibliometrics Committee has run an annual Responsible Metrics State-of-the-Art survey to analyze how institutions are raising awareness of, and using, responsible metrics.

What is Taylor & Francis doing to engage on this?

We support the move towards responsible metrics and have a number of activities and initiatives already in place to help achieve this, including:

- Altmetric data has been available on Taylor & Francis Online for a number of years to help you and your authors understand the wider impact of research in the world. You can use Altmetric to understand who is talking about the research published in your journal, whether policymakers, journalists, or the general public. Altmetric data is featured alongside a range of other metrics, including downloads and citations, helping to provide a broad look at impact.

- Data sharing policies (which encourage or mandate data sharing) help researchers get credit for their research outputs beyond just the final article.

- Investment in infrastructure development and creating richer metadata, including using persistent identifiers such as ORCID and the Open Funder Registry to allow for better tracking of funding and authorship.

- As one of the largest HSS (humanities and social sciences) publishers, we’re looking at ways to use metrics in a holistic way.

We are currently exploring ways of progressing this further, including a review of current initiatives and activities. This includes considering whether we are in a position to sign up to DORA, and exploring how we can provide, in the words of DORA, “a richer view of journal performance” on journal homepages.

We would like to start a conversation on this topic – what are your views? Please direct them to your Editorial contact to help inform our policies in this area.